Cybercriminals Are Targeting AI Agents and Conversational Platforms: Emerging Risks for Businesses and Consumers

Cyber Threat Intelligence

Intro

Resecurity has identified a spike in malicious campaigns targeting AI agents and Conversational AI platforms that leverage chatbots to provide automated, human-like interactions for consumers. Conversational AI platforms are designed to facilitate natural interactions between humans and machines using technologies like Natural Language Processing (NLP) and Machine Learning (ML). These platforms enable applications such as chatbots and virtual agents to engage in meaningful conversations, making them valuable tools across various industries.

Chatbots are a fundamental part of conversational AI platforms, designed to simulate human conversations and enhance user experiences. Such components can be viewed as a subclass of AI agents responsible for orchestrating the communication workflow between the end user (consumer) and the AI. Financial institutions (FIs) are widely implementing such technologies to accelerate customer support and internal workflows, which may also introduce compliance and supply chain risks. Many such services are not fully transparent about the data protection and data retention measures they have in place. Because they operate as a 'black box', the associated risks are not immediately visible. That may explain why major tech companies restrict employee access to similar AI tools, particularly those provided by external sources, due to concerns that these services could take advantage of potentially proprietary data submitted to them.

Leading AI innovators, including OpenAI, Microsoft, Google, and Amazon Web Services (AWS), are heavily promoting AI agents so that businesses can use AI to get work done and increase productivity and revenue opportunities. Many existing products are also poised to enhance their generative AI features with increased automation, which highlights the growing role of conversational platforms and AI chatbots.

Unlike traditional chatbots, conversational AI chatbots can offer personalized tips and recommendations based on user interactions. This capability enhances the user experience by providing tailored responses that meet individual needs. Bots can collect valuable data from user interactions, which can be analyzed to gain insights into customer preferences and behaviors. This information can inform business strategies and improve service offerings.

At the same time, this data collection creates a significant data protection risk, because personalized interactions may reveal sensitive information and context about users. Another important question is whether the collected user input will be kept for further training, and whether such data will later be sanitized to minimize the disclosure of PII (Personally Identifiable Information) and other data that may affect user privacy in case of a breach.
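As a minimal sketch of the sanitization step mentioned above, the following Python snippet redacts a few common PII patterns from a user message before it is stored or reused for training. The patterns and the `redact_pii` helper are illustrative assumptions, not part of any specific platform. Production systems would typically need locale-aware rules and dedicated PII detection.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
# Order matters: card numbers are checked before the looser phone pattern.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(text: str) -> str:
    """Replace each matched value with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact_pii("Contact me at jane.doe@example.com"))
```

Redacting before storage, rather than at query time, means a later breach of the conversation archive exposes placeholders instead of the original values.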

Understanding Conversational AI vs. Generative AI

Communications AI, often referred to as Conversational AI, is primarily designed to facilitate two-way interactions with users. It focuses on understanding and processing human language to generate human-like responses. This technology is commonly used in chatbots, virtual assistants, and customer service applications, where the goal is to provide meaningful and contextually relevant interactions.

On the other hand, Generative AI is centered around creating new content based on patterns learned from existing data. This includes generating text, images, music, and other forms of media. Generative AI is not limited to conversation; it can produce original works autonomously when prompted, making it suitable for applications in creative fields, content creation, and more.

Key Differences

Purpose:

- Conversational AI aims to engage users in dialogue and provide responses that mimic human conversation.

- Generative AI focuses on producing new content, such as writing articles, creating artwork, or composing music.

Functionality:

- Conversational AI processes input from users and generates appropriate responses, often relying on predefined scripts or learned conversational patterns.

- Generative AI analyzes data to create entirely new outputs, leveraging complex algorithms to innovate rather than just respond.

In summary, while both technologies utilize AI principles, Conversational AI is about interaction and dialogue, whereas Generative AI is about content creation and innovation.

Conversational AI systems are becoming increasingly prevalent across various industries and applications. Here are some notable examples:

Virtual Assistants

- Siri (Apple), Alexa (Amazon), Google Assistant (Google) - These voice-activated virtual assistants use conversational AI to understand natural language and provide responses to user queries.

AI-powered Chatbots

- Chatbots on e-commerce websites - These AI-powered chatbots assist customers with product information, order tracking, and other inquiries.

- Messaging app chatbots - Chatbots integrated into messaging platforms like Slack, Facebook Messenger, and WhatsApp to provide automated customer support and information.

Voice-Activated Bots

- Voice-to-text dictation tools - Conversational AI systems that can transcribe spoken language into text, enabling hands-free communication.

- Voice-activated smart home devices - Examples include Amazon Alexa, Google Nest, and Apple HomePod, which use conversational AI to control smart home functions.

Enterprise Applications

- Automated customer service agents - AI-powered chatbots and virtual agents that handle common customer inquiries and tasks, such as billing, account management, and troubleshooting.

- HR onboarding assistants - Conversational AI systems that can guide new employees through the onboarding process, answering questions and providing information.

Healthcare Applications

- Virtual nursing assistants - Conversational AI systems that can engage with patients, provide medical advice, and assist with appointment scheduling and medication management.

These examples show the diverse applications of conversational AI, ranging from consumer-facing virtual assistants to enterprise-level automation and support systems. As the technology continues to evolve, we can expect to see even more innovative use cases for conversational AI in the future.

Emerging Risks from AI Agents and Conversational AI

A recent publication by Avivah Litan, Distinguished VP Analyst at Gartner, highlighted the following emerging risks and security threats introduced by AI agents:

- Data Exposure or Exfiltration

Risks can occur anywhere along the chain of agent events.

- System Resource Consumption

Uncontrolled agent executions and interactions—whether benign or malicious—can lead to denial of service or denial of wallet scenarios, overloading system resources.

- Unauthorized or Malicious Activities

Autonomous agents may perform unintended actions, including “agent hijacking” by malicious processes or humans.

- Coding Logic Errors

Unauthorized, unintended, or malicious coding errors by AI agents can result in data breaches or other threats.

- Supply Chain Risk

Using libraries or code from third-party sites can introduce malware that targets both non-AI and AI environments.

- Access Management Abuse

Embedding developer credentials into an agent’s logic, especially in low- or no-code development, can lead to significant access management risks.

- Propagation of Malicious Code

Automated agent processing and retrieval-augmented generation (RAG) poisoning can trigger malicious actions.

These risks highlight the importance of designing robust controls to mitigate data exposure, resource consumption, and unauthorized activities.
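One possible control against the resource consumption risk listed above is a hard per-session budget. The sketch below is a hypothetical example: the `AgentBudget` class, its field names, and its limits are assumptions made for illustration. It refuses any agent call that would exceed a cap on invocations or tokens.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when an agent session would exceed its allowance."""


@dataclass
class AgentBudget:
    max_calls: int   # hypothetical cap on model/tool invocations per session
    max_tokens: int  # hypothetical cap on total tokens per session
    calls: int = 0
    tokens: int = 0

    def charge(self, tokens: int) -> None:
        """Record one call, rejecting it if either cap would be exceeded."""
        if self.calls + 1 > self.max_calls:
            raise BudgetExceeded("call limit reached")
        if self.tokens + tokens > self.max_tokens:
            raise BudgetExceeded("token limit reached")
        self.calls += 1
        self.tokens += tokens


if __name__ == "__main__":
    budget = AgentBudget(max_calls=2, max_tokens=100)
    budget.charge(40)
    budget.charge(50)
    try:
        budget.charge(5)  # third call is over the call cap
    except BudgetExceeded as exc:
        print("blocked:", exc)
```

Checking the budget before the call, not after, keeps a runaway or hijacked agent from spending past the limit even once.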

Conversational AI, while offering significant benefits in user interaction and automation, also presents several risks that need to be carefully managed. Such systems often handle sensitive user information, raising concerns about breaches of confidentiality and data exposure.

For example, when interacting with a Conversational AI system, the end user may request updates on the latest payment confirmation, ask about delivery status by sharing their address, and provide other personal information to receive appropriate output. If this information is compromised, it could lead to a significant data leak.

Dark Web Activity

One of the critical categories of Conversational AI platforms is AI-powered Call Center Software and Customer Experience Suites. Such solutions utilize purpose-built chatbots to interact with consumers by processing their input and generating meaningful insights. Implementing AI-powered solutions like these is especially significant in fintech, e-commerce, and e-government - where the number of end consumers is substantial and the volume of information to be processed makes manual human interaction nearly impossible, or at least commercially and practically ineffective. Trained AI models optimize feedback to consumers and assist with further requests, reducing response times and human-intensive procedures that could be addressed by AI.

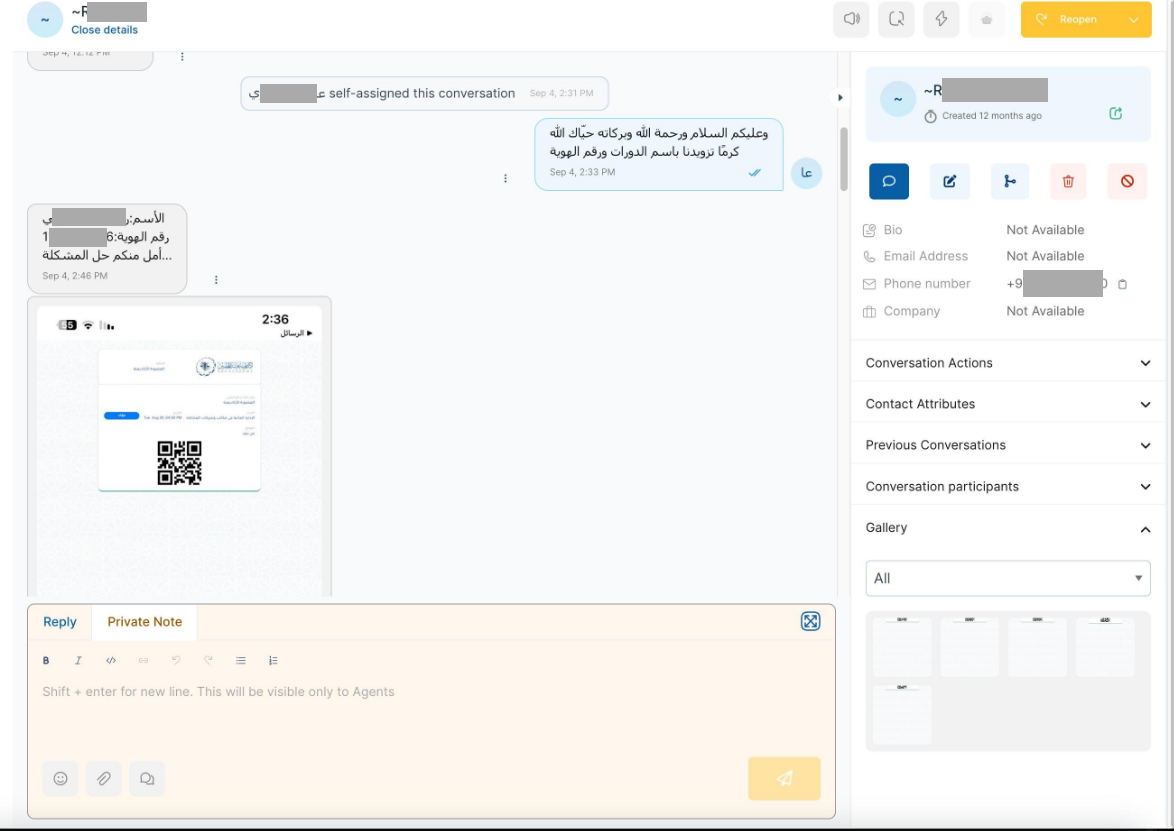

On October 8, 2024, Resecurity identified a posting on the Dark Web related to the monetization of stolen data from one of the major AI-powered cloud call center solutions in the Middle East.

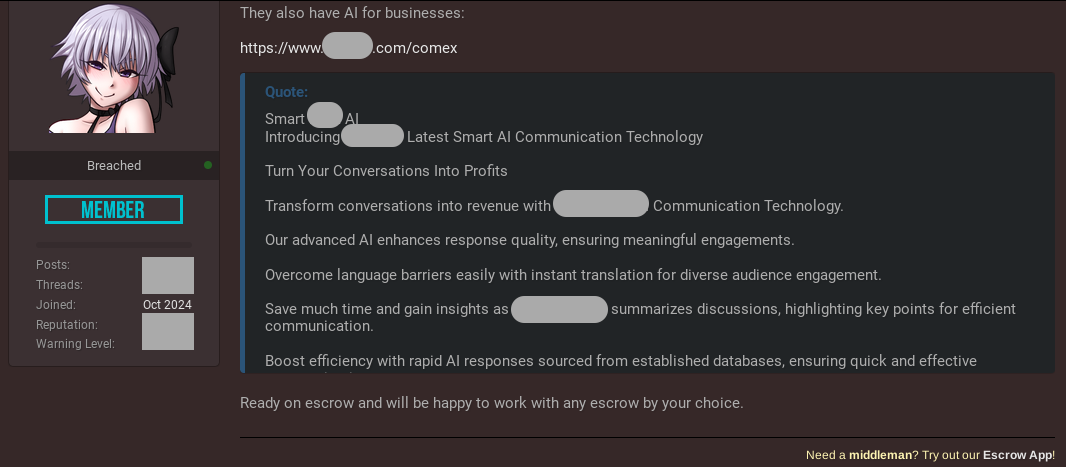

The threat actor gained unauthorized access to the management dashboard of the platform, which contains over 10,210,800 conversations between consumers, operators, and AI agents (bots). Stolen data could be used to orchestrate advanced fraudulent activities and social engineering campaigns, as well as other cybercriminal tactics using AI. The incident was detected in a timely manner and successfully mitigated by alerting the affected party and collaborating with law enforcement organizations. Nevertheless, the bad actors stole a massive amount of information, creating privacy risks for consumers. Based on available Human Intelligence (HUMINT), Resecurity acquired additional artifacts related to the incident from the actor:

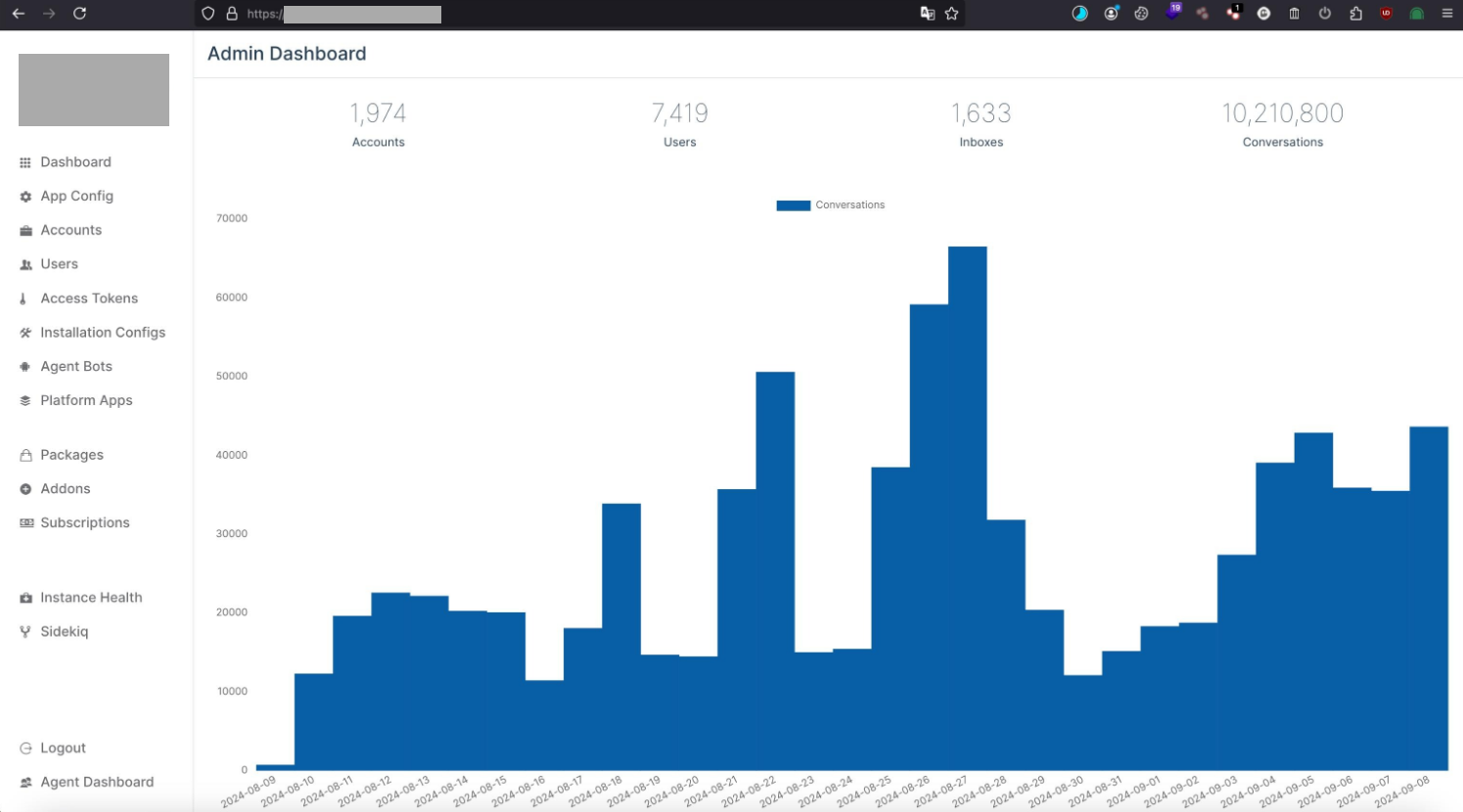

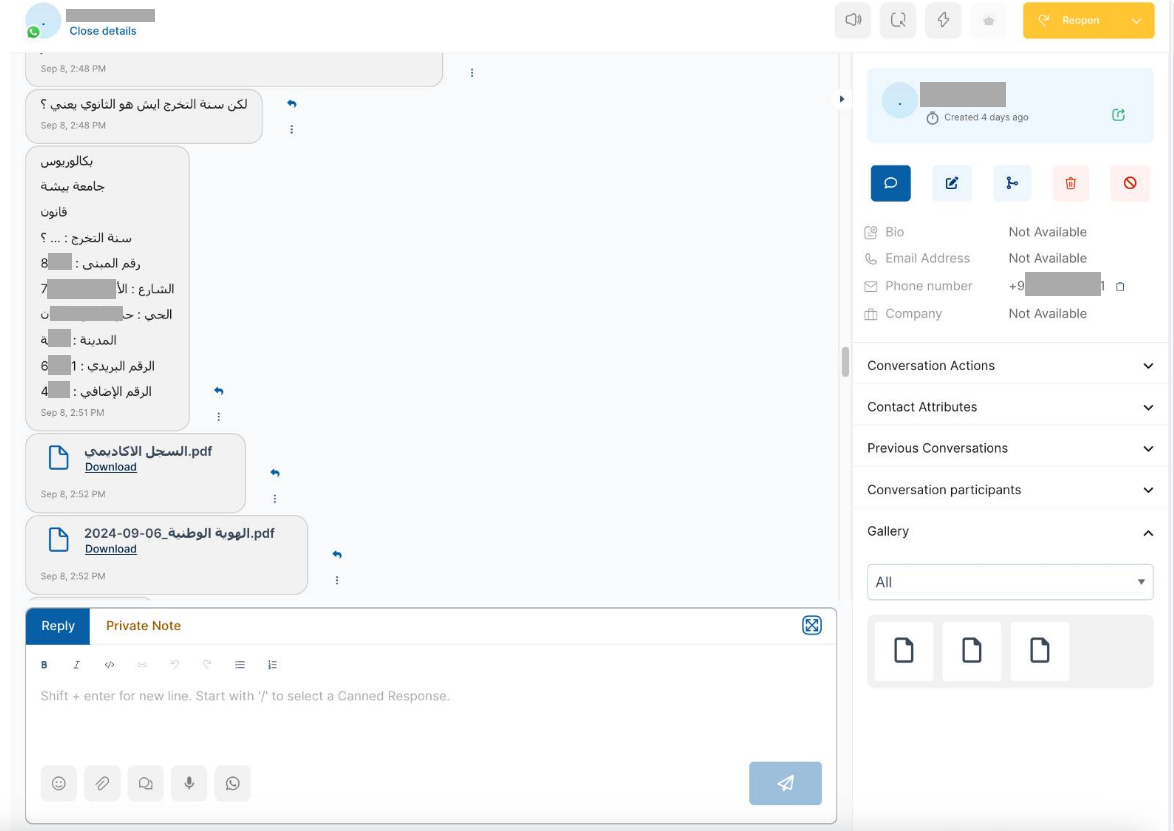

One significant impact of the identified malicious activity is that the breached communications between AI agents and consumers revealed personally identifiable information (PII), including national ID documents and other sensitive details that consumers provided to address specific requests. The adversary could apply data mining and extraction techniques to acquire records of interest and use them in advanced phishing scenarios and for other offensive cyber purposes.

Trust in the AI Platform: Precursor of Data Leak

As a result of the compromise, adversaries could access specific customer sessions, steal data, and learn the context of the interaction with the AI agent, which could later lead to session hijacking. This vector may be especially effective in fraudulent and social engineering campaigns in which the adversary focuses on acquiring payment information from the victim under the pretext of KYC verification or technical support from a specific financial institution or payment network. Many conversational AI platforms allow users to switch between an AI-assisted operator and a human, so a bad actor could intercept the session and take control of the dialogue. Exploiting user trust, bad actors could ask victims to provide sensitive information or perform certain actions (for example, confirming an OTP) that could be used in fraudulent schemes. Resecurity forecasts a variety of social engineering schemes that could be orchestrated by gaining access to and abusing trusted conversational AI platforms.

The end victim (consumer) will remain completely unaware if an adversary intercepts the session and will continue to interact with the AI agent, thinking that the session is secure and that the further course of action is legitimate. The adversary may exploit the victim's trust in the AI platform and obtain sensitive information, which could later be used for payment fraud and identity theft.

Retained PII may be exposed both in breached communications that an adversary finds in a conversational AI platform's data and in the platform's models. For example, according to one of the case studies published by the Australian Signals Directorate’s Australian Cyber Security Centre (ASD’s ACSC) in collaboration with international partners, third-party hosted AI systems require comprehensive risk assessment. In November 2023, a team of researchers published the outcomes of their attempts to extract memorised training data from AI language models. One of the applications the researchers experimented with was ChatGPT. In that case, the researchers found that prompting the model to repeat a word forever led it to divulge training data at a much higher rate than during normal behavior. The extracted training data included personally identifiable information (PII).

Third-Party Hosted AI Systems: A Major Risk to the Supply Chain

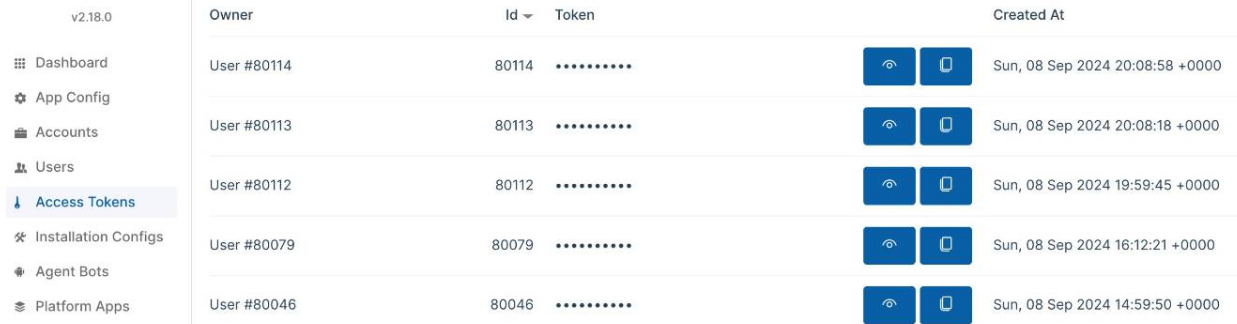

Besides the issue of retained personally identifiable information (PII) stored in communications between the AI agent and end users, bad actors were also able to target access tokens, which enterprises use to integrate the service with the APIs of external services and applications.

Token manipulation may lead to malicious data injection into supported integration channels and negatively impact the end consumers of AI conversational platforms. AI agents' outputs could be integrated into various platforms, and bots could be added to other applications like Discord, WhatsApp, Slack, and Zapier.
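One way an integration channel could detect injected or altered messages is to verify a message signature in addition to the access token. The sketch below is an assumption for illustration, not a description of any particular platform: it supposes that the AI platform and the receiving integration share a separate signing secret, and that each payload carries an HMAC-SHA256 signature. The `sign` and `verify` helpers are hypothetical names.

```python
import hashlib
import hmac


def sign(secret: bytes, payload: bytes) -> str:
    """Compute a hex HMAC-SHA256 signature over the raw payload."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify(secret: bytes, payload: bytes, signature: str) -> bool:
    """Accept the payload only if the signature matches.

    compare_digest performs a constant-time comparison, which avoids
    leaking how many leading characters of the signature were correct.
    """
    expected = sign(secret, payload)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    secret = b"example-signing-secret"  # placeholder; load from a secret store
    message = b'{"channel": "support", "text": "Your order has shipped"}'
    signature = sign(secret, message)
    print(verify(secret, message, signature))                 # untouched payload
    print(verify(secret, b'{"text": "tampered"}', signature))  # altered payload
```

Under this assumption, an adversary who holds only a stolen access token cannot produce a valid signature for injected content, provided the signing secret is stored separately from the token.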

Due to the significant penetration of external AI systems into enterprise infrastructure and the processing of massive volumes of data, their implementation without proper risk assessment should be considered an emerging IT supply chain cybersecurity risk.

According to Gartner, third-party AI tools pose data confidentiality risks. As your organization integrates AI models and tools from third-party providers, you also absorb the large datasets used to train those AI models. Your users could access confidential data within others’ AI models, potentially creating regulatory, commercial and reputational consequences for your organization.

Spectrum of Attacks against AI-enabled systems

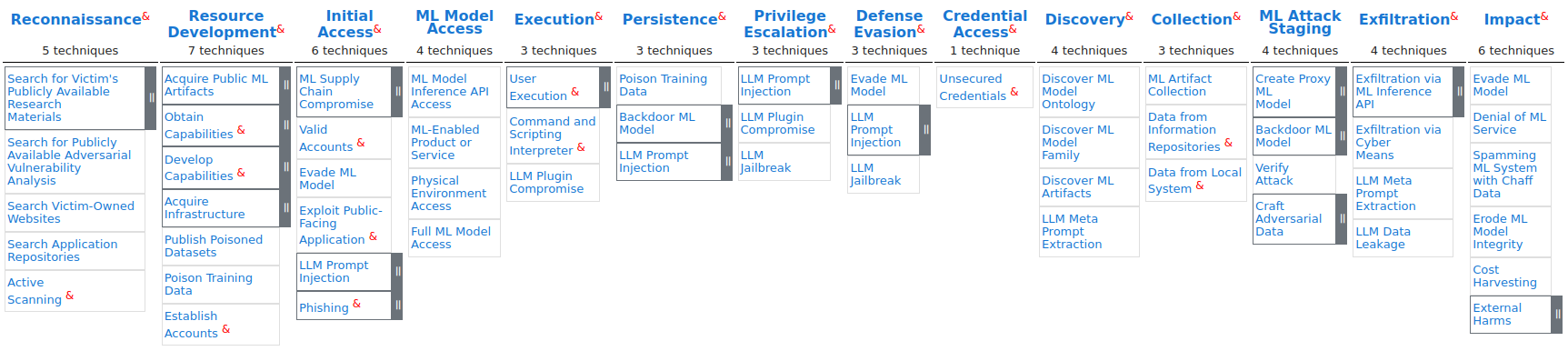

The spectrum of adversary tactics and techniques against AI-enabled systems based on real-world attack observations is defined in the MITRE ATLAS Matrix. It provides a framework for identifying and addressing vulnerabilities in AI systems, which can help to prevent attacks and protect sensitive data, and enables researchers to navigate the landscape of threats to artificial intelligence systems.

Using the MITRE ATLAS Matrix, Resecurity mapped the observed malicious activity to the key TTPs:

- AML.T0012: Valid Accounts

- AML.T0049: Exploit Public-Facing Application

- AML.T0052: Phishing

- AML.T0055: Unsecured Credentials

- AML.T0007: Discover ML Artifacts

- AML.T0035: ML Artifact Collection

- AML.T0043: Craft Adversarial Data

- AML.T0025: Exfiltration via Cyber Means

- AML.T0024: Exfiltration via ML Inference API

- AML.T0048: External Harms (Financial Impact)
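For teams that want to tag their own alerts with the techniques mapped in this report, a simple lookup table may be enough. The sketch below is illustrative: the `annotate` helper and the event fields are assumptions, and technique names can differ between MITRE ATLAS releases, so they should be checked against the current matrix.

```python
# Technique IDs mapped in this report; names may vary by ATLAS release.
OBSERVED_TECHNIQUES = {
    "AML.T0012": "Valid Accounts",
    "AML.T0049": "Exploit Public-Facing Application",
    "AML.T0052": "Phishing",
    "AML.T0055": "Unsecured Credentials",
    "AML.T0007": "Discover ML Artifacts",
    "AML.T0035": "ML Artifact Collection",
    "AML.T0043": "Craft Adversarial Data",
    "AML.T0025": "Exfiltration via Cyber Means",
    "AML.T0024": "Exfiltration via ML Inference API",
    "AML.T0048": "External Harms",
}


def annotate(event: dict) -> dict:
    """Return a copy of the event with the technique name attached, if known."""
    technique_id = event.get("technique_id")
    name = OBSERVED_TECHNIQUES.get(technique_id, "unknown")
    return {**event, "technique_name": name}


if __name__ == "__main__":
    # Hypothetical alert produced by a detection pipeline.
    alert = {"source": "dashboard-login", "technique_id": "AML.T0012"}
    print(annotate(alert))
```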

Mitigation Measures

Resecurity highlights the importance of a comprehensive AI trust, risk, and security management (TRiSM) program to proactively ensure that AI systems are compliant, fair, and reliable, and that they protect data privacy. The EU AI Act and other regulatory frameworks in North America, China, and India are already establishing regulations to manage the risks of AI applications. For example, the recent PDPC AI Guidelines in Singapore encourage businesses to be more transparent in seeking consent for personal data use through disclosure and notifications. Businesses must ensure that AI systems are trustworthy, thereby giving consumers confidence in how their personal data is used.

According to the Principles for Responsible, Trustworthy and Privacy-Protective Generative AI Technologies published by the Office of the Privacy Commissioner of Canada and other industry regulators, it is critical to conduct assessments, such as Privacy Impact Assessments (PIAs), to identify and mitigate potential or known impacts that a generative AI system (or its proposed use, as applicable) may have on privacy.

The Best Practices for Deploying Secure and Resilient AI Systems published by the National Security Agency (NSA) recommend adopting a Zero Trust (ZT) mindset, which assumes that a breach is inevitable or has already occurred.

Considering the observed malicious activity involving the compromise of conversational AI platforms and its significant impact on customer privacy, Resecurity emphasizes the importance of secure communications between AI agents and end consumers. This includes minimizing the retention of personally identifiable information (PII) and adopting a proactive approach to supply chain cybersecurity in the context of third-party hosted AI solutions.
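Minimizing retention can be as simple as enforcing a maximum age for stored conversations. The following sketch is a hypothetical illustration: the `purge_expired` helper, the `created_at` field, and the 30-day window are assumptions, and a real platform would apply the same rule inside its own data store.

```python
from datetime import datetime, timedelta, timezone


def purge_expired(conversations, retention_days, now=None):
    """Return only the conversations newer than the retention window.

    Each conversation is assumed to be a dict with a timezone-aware
    'created_at' datetime (a hypothetical schema for this sketch).
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [c for c in conversations if c["created_at"] >= cutoff]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    stored = [
        {"id": 1, "created_at": now - timedelta(days=5)},
        {"id": 2, "created_at": now - timedelta(days=45)},
    ]
    kept = purge_expired(stored, retention_days=30, now=now)
    print([c["id"] for c in kept])
```

The less history a platform keeps, the less an adversary can extract from a compromised management dashboard.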

Significance

Conversational AI platforms have become a critical element of the modern IT supply chain for major enterprises and government agencies. Their protection will require a balance between traditional cybersecurity measures relevant to SaaS (Software-as-a-Service) and those specialized and tailored to the specifics of AI.

At some point, conversational AI platforms begin to replace traditional communication channels. Instead of "old-school" email messaging, these platforms enable interaction via AI agents that deliver fast responses and provide multi-level navigation across services of interest in near real-time. The evolution of technology has also led to adjustments in tactics by adversaries looking to exploit the latest trends and dynamics in the global ICT market for their own benefit.

Resecurity has detected notable interest in conversational AI platforms from both the cybercriminal community and state actors, driven by the considerable number of consumers and the massive volumes of information processed during interactions and personalized sessions supported by AI. As new consumer-oriented offerings appear, cybercriminals will continue to target conversational AI platforms.

For example, this year China launched the prototype of an AI hospital to showcase an innovative approach to healthcare, in which virtual nursing assistants and doctors will interact with patients. Such innovations may create significant risks for patients' privacy in the long term, as conversational AI may transmit and process sensitive healthcare data.

Conversational AI is already revolutionizing the banking and fintech industry by automating customer support, providing personalized financial guidance, and enhancing transactional efficiency, improving customer satisfaction and operational effectiveness.

These examples show the diverse applications of conversational AI, ranging from consumer-facing virtual assistants to enterprise-level automation and support systems. As the technology continues to evolve, we can expect to see even more creative use cases for conversational AI, along with new cybersecurity threats targeting such technologies and their end users.

References

- Mitigate Emerging Risks and Security Threats from AI Agents

https://securitymea.com/2024/09/10/mitigate-emerging-risks-and-security-threats-from-ai-agents/

- Tackling Trust, Risk and Security in AI Models

https://www.gartner.com/en/articles/what-it-takes-to-make-ai-safe-and-effective

- Principles for responsible, trustworthy and privacy-protective generative AI technologies

https://www.priv.gc.ca/en/privacy-topics/technology/artificial-intelligence/gd_principles_ai/

- Engaging with Artificial Intelligence (AI)

https://media.defense.gov/2024/Jan/23/2003380135/-1/-1/0/CSI-ENGAGING-WITH-ARTIFICIAL-INTELLIGENCE.P...