Digital Echo Chambers and Erosion of Trust - Key Threats to the US Elections

Cyber Threat Intelligence

Shaping Public Narratives

Social media plays a crucial role in shaping public narratives around candidates and issues. The way information is presented and discussed on these platforms can influence public perception and affect election results. For instance, negative narratives about candidates can gain traction quickly and erode their support.

As traditional media consumption declines, many voters turn to social media for news and election updates. This shift drives more people to create accounts, particularly to engage with political content and discussions related to the elections. Foreign adversaries exploit this shift by running influence campaigns to manipulate public opinion. To do so, they use accounts with monikers that reflect election sentiment or borrow the names of political candidates to mislead voters.

Such activity has been identified not only on social media networks headquartered in the US but also on platforms in foreign jurisdictions and on alternative digital media channels. The actors may operate in less moderated environments, using foreign social media and web resources that are also read by a domestic audience and whose content can easily be redistributed via mobile messaging and email.

Resecurity has detected a substantial increase in the distribution of political content related to the 2024 US elections through social media networks, particularly from foreign jurisdictions.

Formation of Echo Chambers

Social media can create echo chambers where users are exposed primarily to information that reinforces their existing beliefs. This phenomenon can polarize public opinion, as individuals become less likely to encounter opposing viewpoints. Such environments can intensify partisan divides and influence voter behavior by reinforcing existing biases.

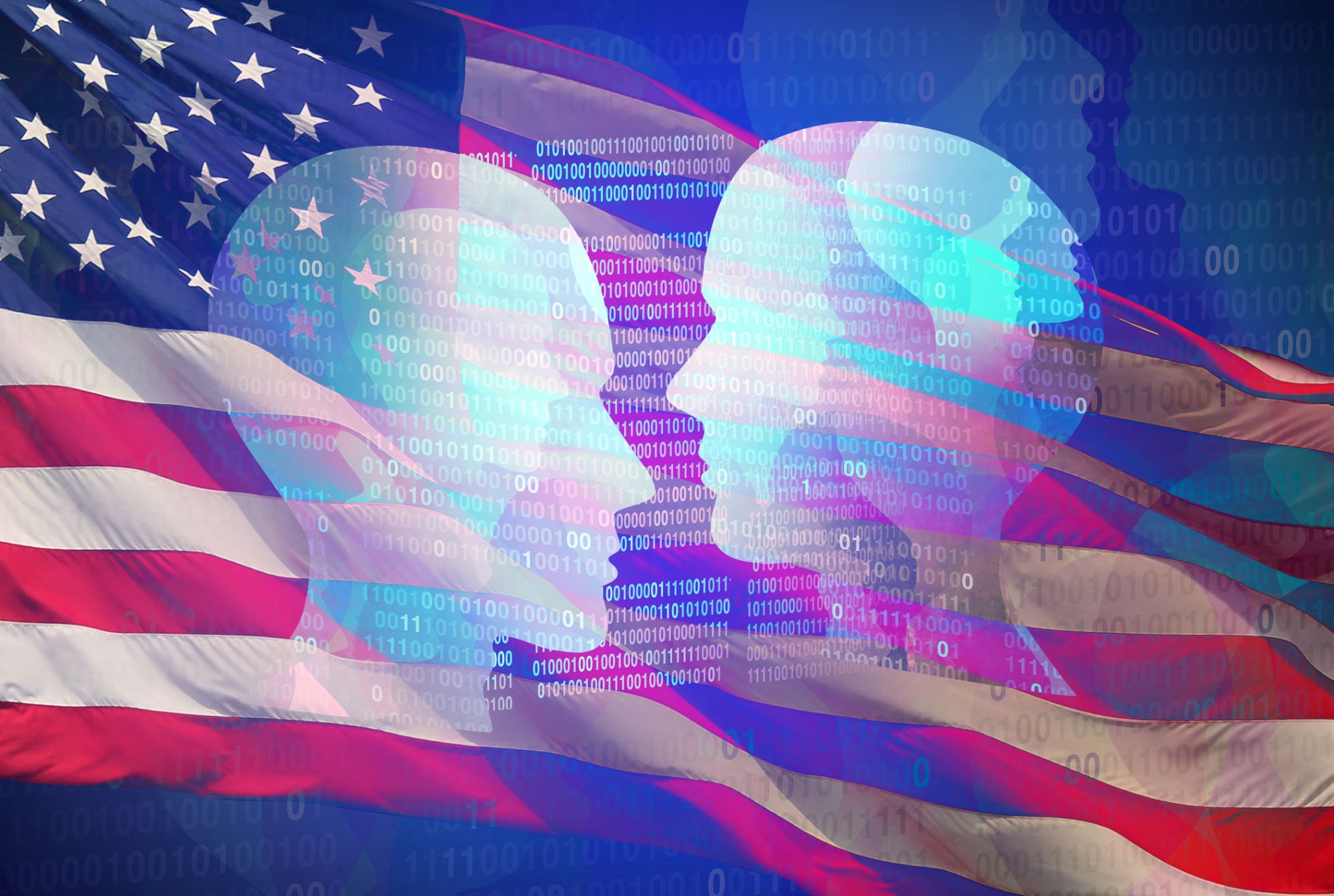

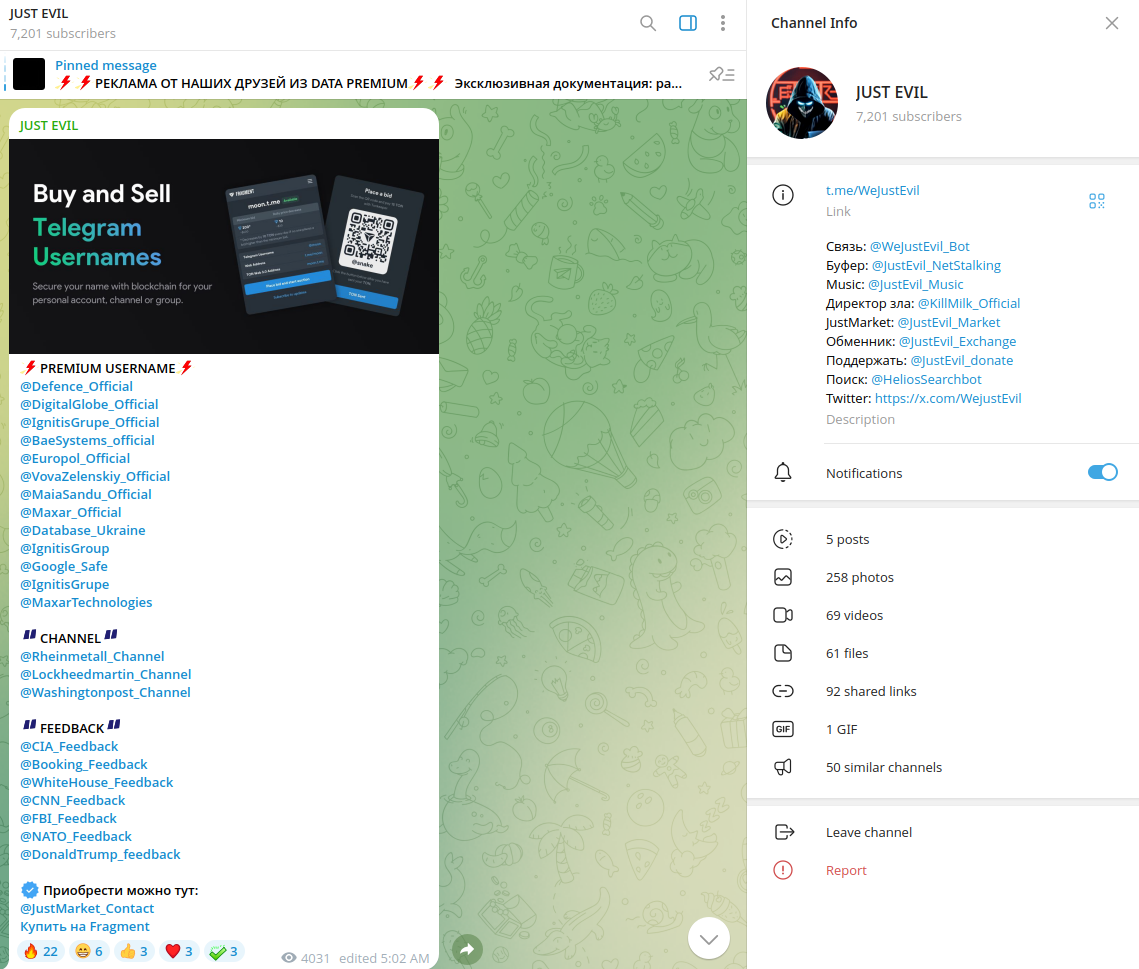

For example, on October 5, 2024, Resecurity identified multiple Telegram accounts for sale that impersonated government entities, including the White House, the FBI and the CIA, as well as popular media outlets such as CNN and The Washington Post. An account impersonating Donald Trump, 'DonaldTrump_feedback', was also put up for sale. The accounts were listed on Fragment, a marketplace for Telegram accounts.

Multiple Telegram accounts built around a U.S. elections narrative have been identified. They were registered and offered for sale at prices ranging from 10 TON ($51) to 2028 TON ($2290). Such accounts would likely be of great interest to political consultancies and to foreign adversaries seeking to target specific audience segments.

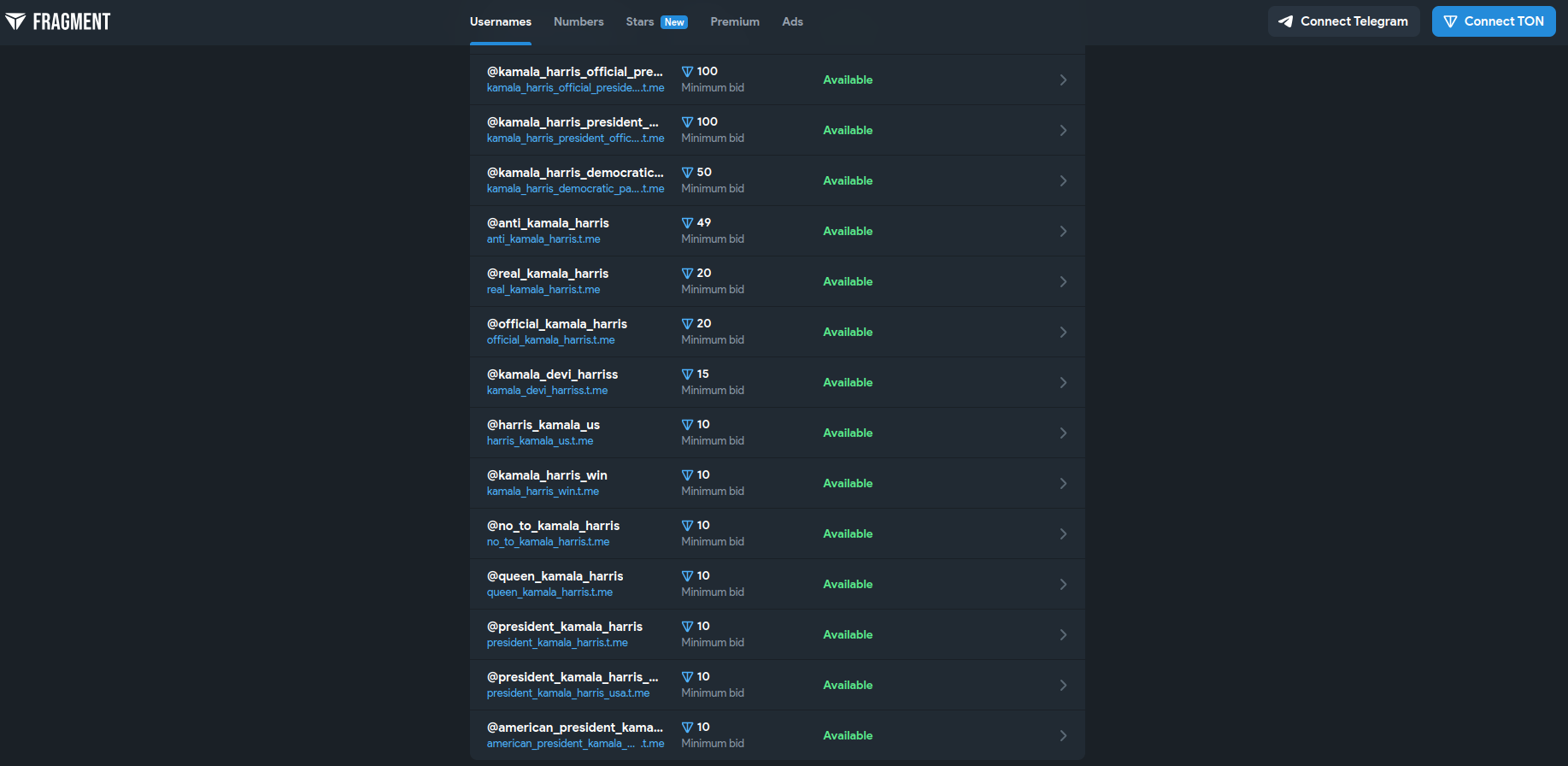

Accounts under the name Kamala Harris were also offered for sale:

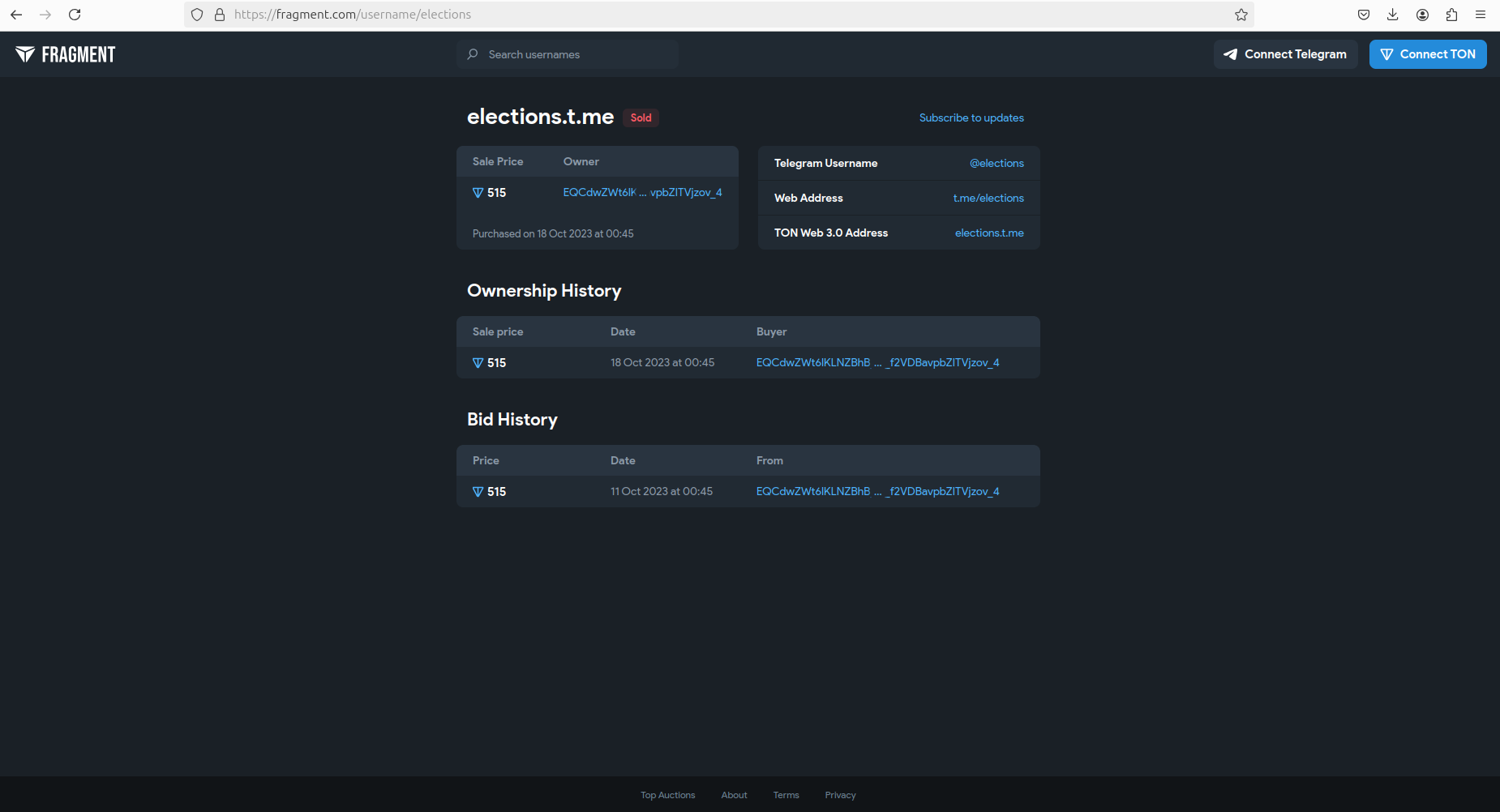

One account (@elections) was sold on October 18, 2023, more than a year before the elections.

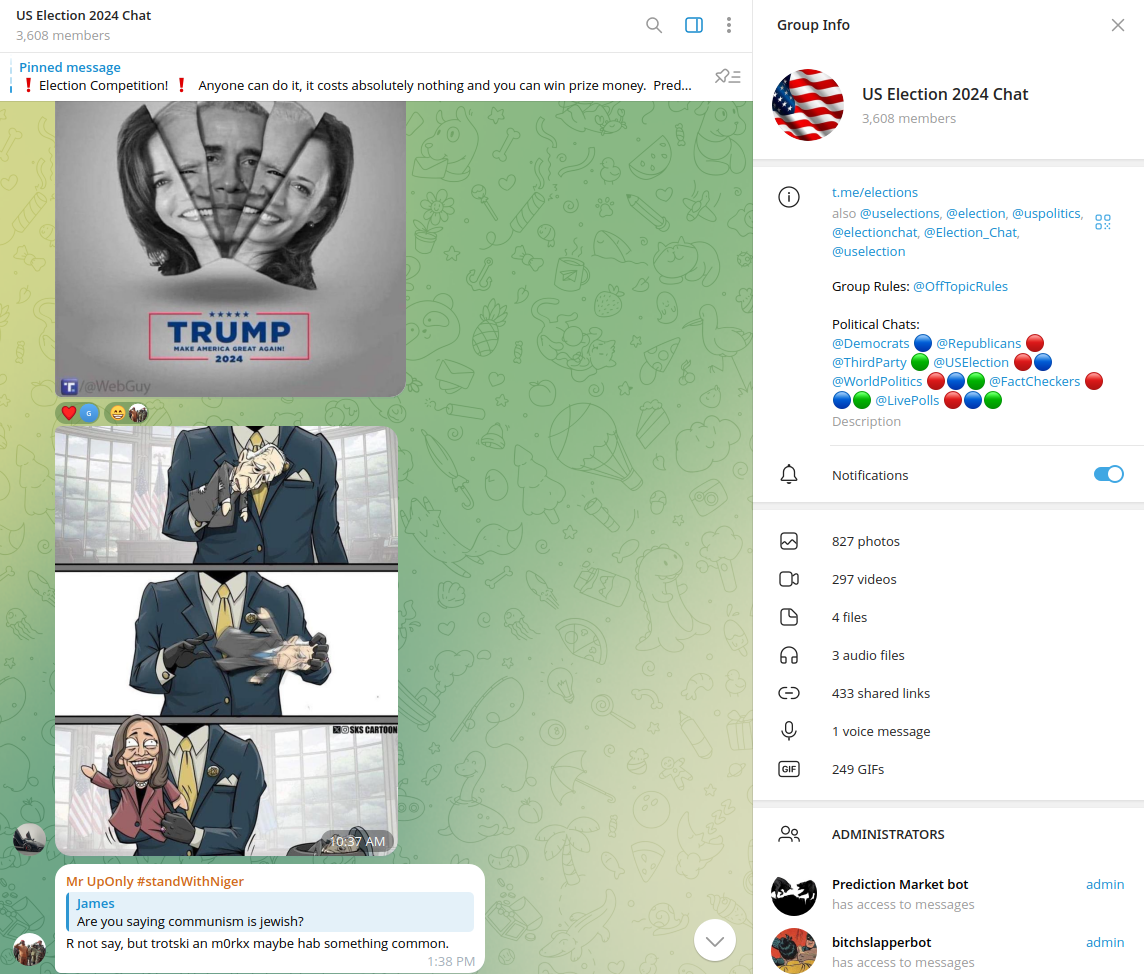

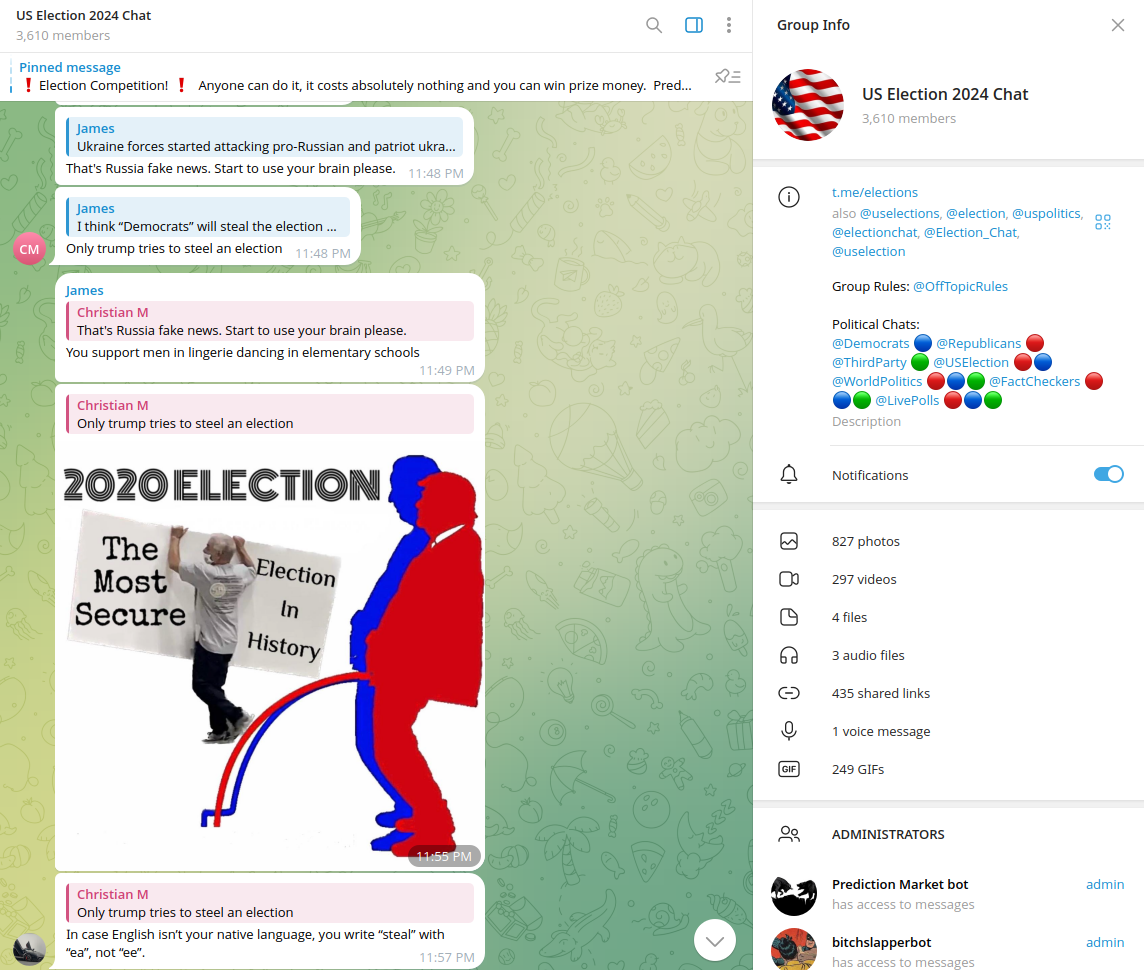

This account currently spreads content designed to create negative opinions about political candidates and to discredit the U.S. elections. A critical goal of foreign adversaries, and a central component of these campaigns, is to sow social polarization and distrust in electoral integrity. These campaigns often both promote and disparage each candidate, because their aim is not to favor one candidate over the other. Instead, they seek to undermine trust in the election process and to stoke animosity among the losing candidate's supporters toward the winning candidate and their supporters.

Multiple posts have been identified that portray the elections as insecure or manipulated, leveraging the profiles of candidates and other notable figures.

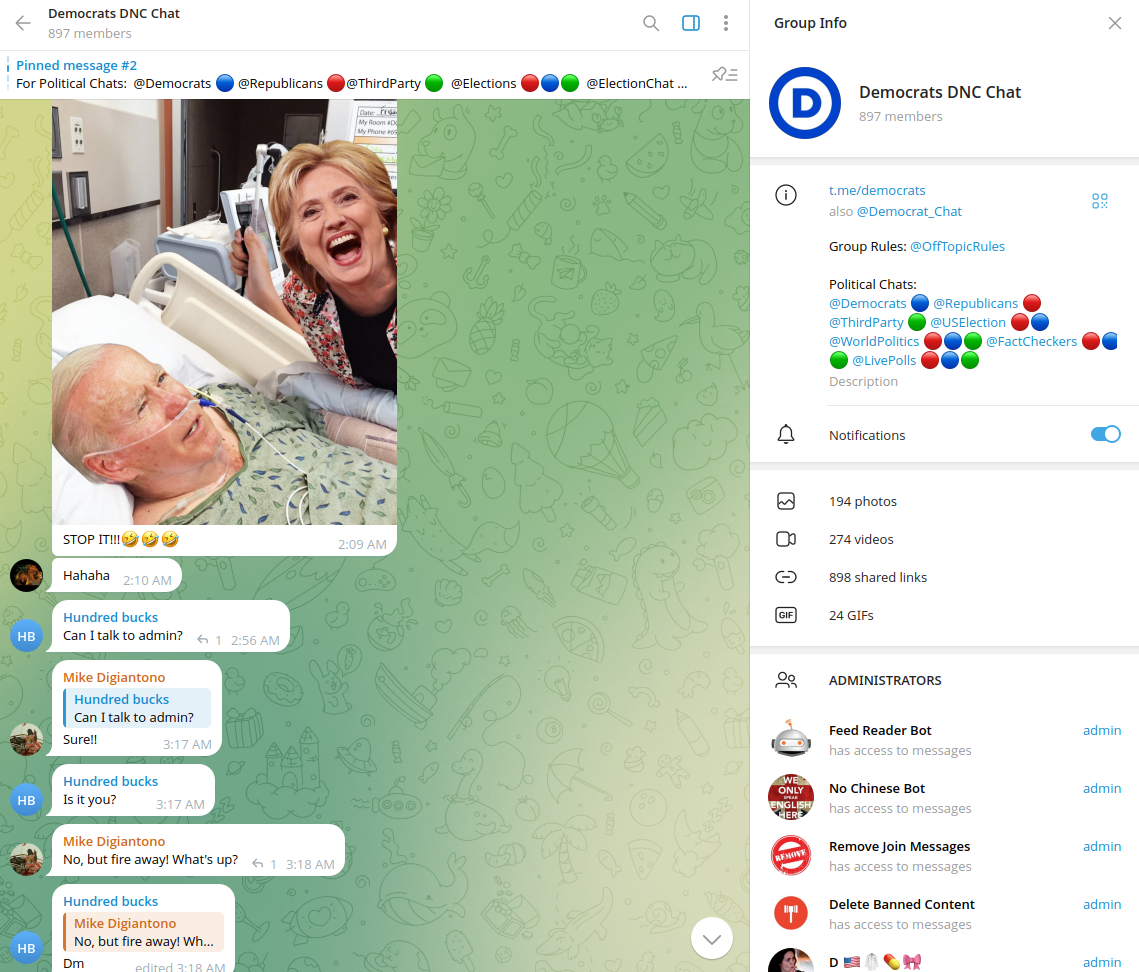

Resecurity identified several associated groups promoting the same "echo" narrative, regardless of each group's stated focus. For example, a group that purports to support the Democratic Party contained content of the opposite, discrediting nature.

Notably, most user profiles in such groups contain vague descriptions and patterns characteristic of 'troll factories'.

Example:

Misinformation and Influence Operations

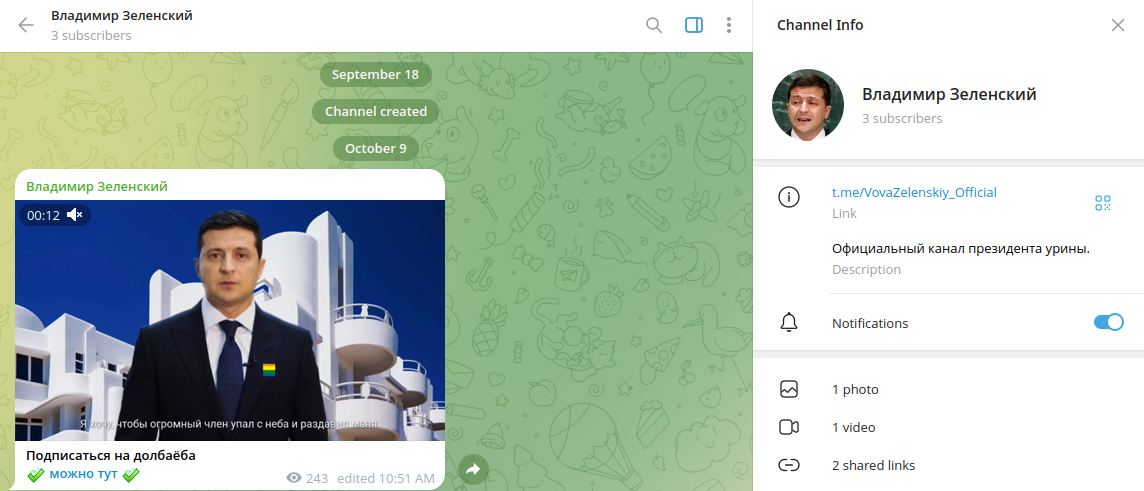

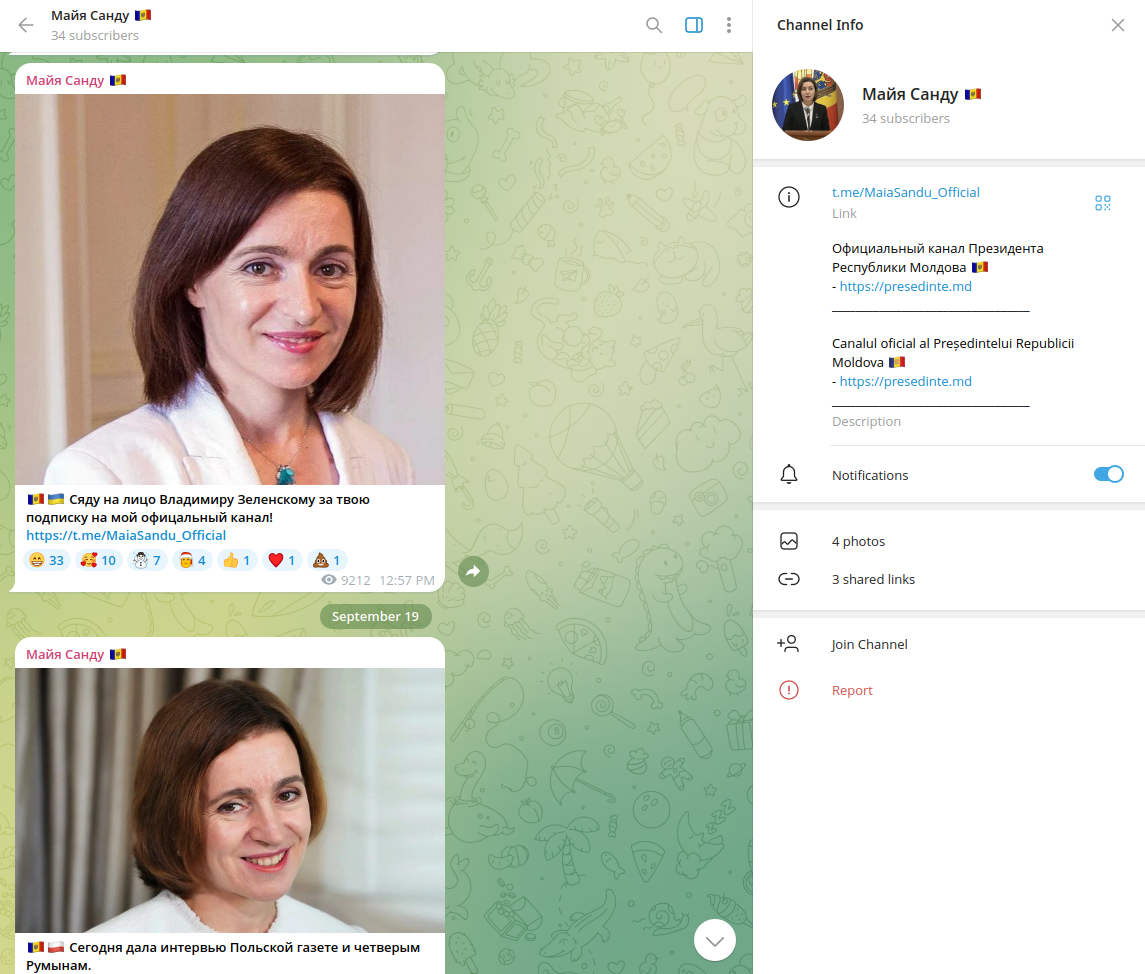

Similar accounts are being used to target Volodymyr Zelenskyy, the President of Ukraine, and Maia Sandu, who has been President of Moldova since December 24, 2020, and is the country's first female president.

Analysts warned of a “large-scale hybrid war” by foreign adversaries against Moldova ahead of its presidential election on Sunday, October 20, 2024, which was held alongside a referendum on the country's future relations with the European Union. Notably, the Telegram account designed to discredit Moldova’s incumbent president, Maia Sandu, who supports the country’s integration with Europe, appeared one month before the referendum. “It’s unprecedented in terms of complexity,” says Ana Revenco, Moldova’s former interior minister, now in charge of the country’s new Center for Strategic Communication and Combating Disinformation. Similar activity has also been identified on TikTok and other social media platforms. “This shows us our collective vulnerability,” she says.

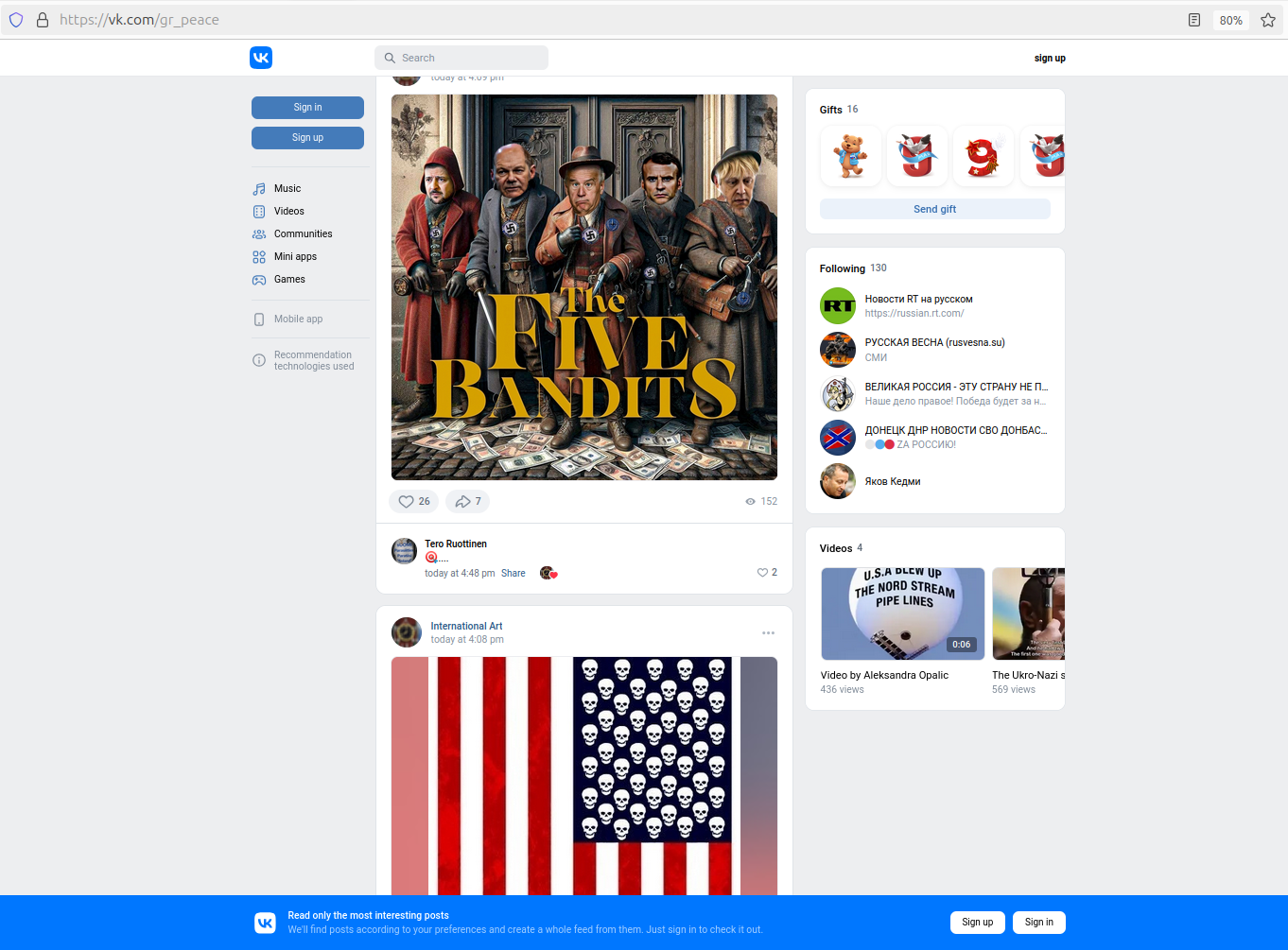

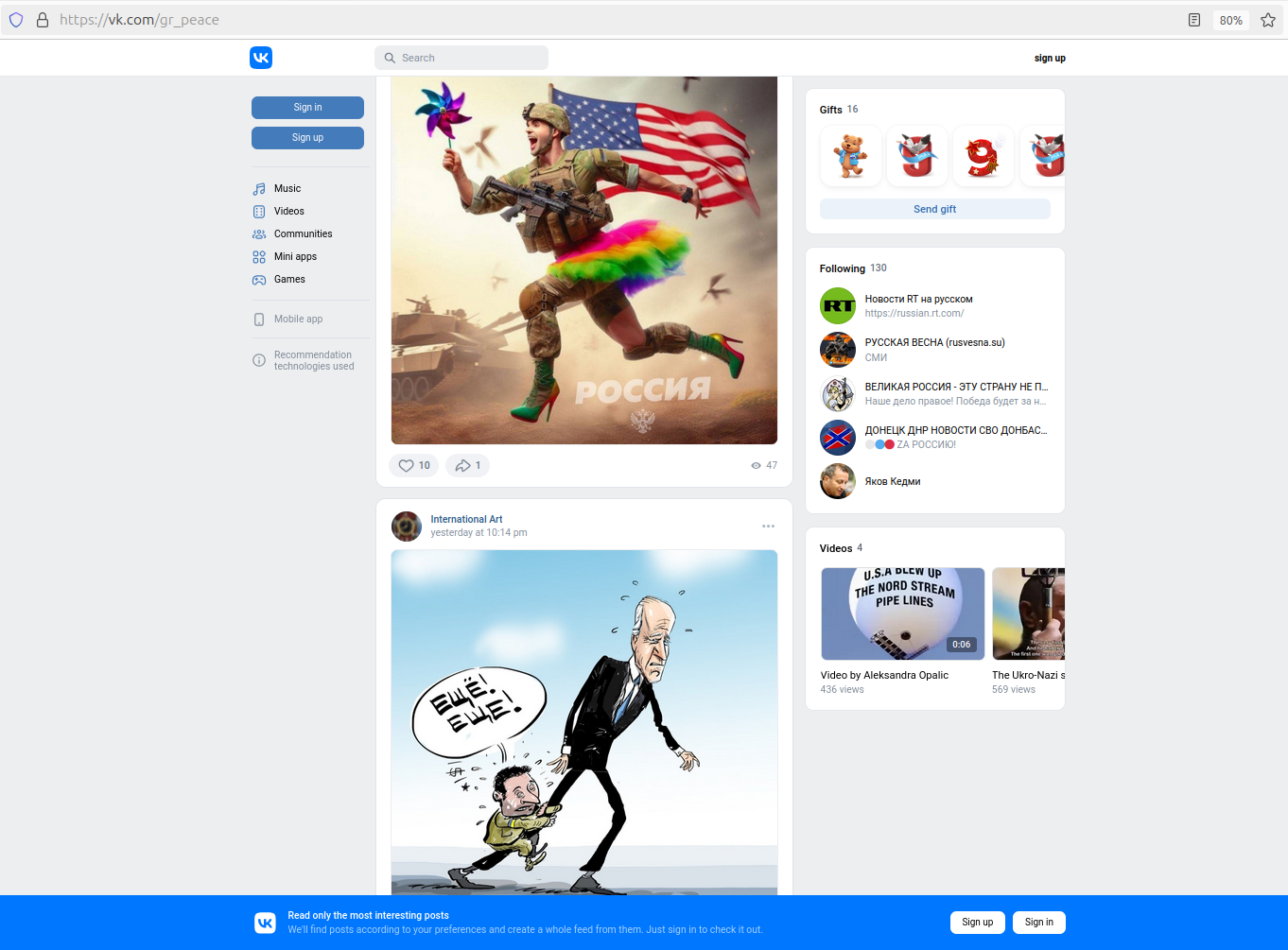

Resecurity has observed similar activity on the VKontakte (VK) social network. Our team has assigned this activity the codename "Psy Ops, Inc.", after one of the previously identified accounts that planted such content online.

Resecurity identified several clusters of accounts with 'troll factory' patterns that promote negative content about U.S. and EU leadership via VK, written in various languages, including French, Finnish, German, Dutch, and Italian.

The content is mixed with antisemitic geopolitical narratives, which would appear to violate the network's existing Terms and Conditions. The accounts remain active and constantly publish updates, which may indicate an organized effort to produce such content and make it available online. In September 2024, the U.S. Department of Justice charged threat actors working for a foreign state-controlled media outlet in a $10 million scheme to create and distribute content to U.S. audiences containing hidden Russian government messaging.

Significance

Foreign adversaries' efforts underscore the need for robust measures to protect election integrity and democracy. As technology advances, developing effective strategies to address these challenges is crucial. This includes implementing clear regulations, enhancing platform policies, and promoting public awareness of the potential risks of manipulated media, as well as a sustained effort to identify and disrupt the infrastructure used to conduct these misinformation campaigns.

By launching misinformation campaigns via social media and web resources in foreign jurisdictions, adversaries often bypass the moderation controls of platforms within the political campaign's jurisdiction and reach specific audience segments. Such content is available and indexed by major search engines and can be disseminated worldwide through digital, mobile, and alternative platform-specific channels. Resecurity forecasts that foreign actors will continue to accelerate their use of such tactics, given the tightened oversight of U.S.-based social media companies, which could limit foreign adversaries' freedom of action. Even when such activity targets specific political candidates, it also benefits the adversaries by damaging the U.S.'s public image internationally and fostering distrust in the democratic institutions it promotes worldwide.

The existing terms and conditions of some social media platforms lack a clear distinction between political satire or parody involving candidates and content showing signs of influence operations and misinformation planted by foreign actors on political topics. The line between these two fundamentally different categories is thin, and foreign actors can exploit it to discredit candidates and elections. This research highlights the difference between two things. The first is the right of any US person to express their own opinion, including satire on political topics, which the First Amendment protects. The second is the malicious activity of foreign actors, funded by foreign governments, who plant discrediting content and leverage manipulated media to undermine elections and disenfranchise voters. For example, content identified by Resecurity on foreign social media included signs of political satire but also antisemitic and geopolitical narratives that benefit foreign states by discrediting US foreign policy and elections. All of these posts were made by bots rather than real people, which further suggests malicious intent. The proliferation of deepfakes and similar content planted by foreign actors poses challenges to the functioning of democracies. Such communications can deprive the public of the accurate information it needs to make informed decisions in elections.

Conclusion

While the threat from foreign actors using media manipulation is growing, intelligence assessments indicate that these efforts, though significant, are not yet revolutionary in their impact. Nevertheless, U.S. agencies remain vigilant, continuously updating their assessments and working to safeguard the integrity of the 2024 election against these evolving threats. By understanding these issues and advocating for responsible media practices, governments, platforms, and the public can work together to build a more informed and secure digital environment that upholds the integrity of elections and defends against the exploitation of emerging technologies.
